Methods

Hardware

To collect sEMG signals, we used a Myo Armband from Thalmic Labs (for a more detailed discussion of this device, see Myo armband). The Myo Armband records sEMG on 8 channels with 8-bit resolution and performs filtering internally. It communicates with other devices via Bluetooth Low Energy, through an interface that provides 4 characteristics, each containing two sEMG samples. These characteristics were updated once per second.
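Each characteristic carries two samples, so the receiving code has to split every payload before the samples enter the processing pipeline. The sketch below assumes the layout in Thalmic Labs' published Bluetooth protocol header (`myohw.h`): 16 bytes, holding two consecutive samples of 8 signed 8-bit channel values. `parse_emg_characteristic` is a hypothetical helper, not part of any Myo SDK, and is written in Python only for consistency with our offline tooling.

```python
import struct
from typing import List, Tuple


def parse_emg_characteristic(payload: bytes) -> Tuple[List[int], List[int]]:
    """Split one EMG characteristic payload into two 8-channel samples.

    Assumed layout: sample1[8] followed by sample2[8], each value a
    signed 8-bit integer (matching the armband's 8-bit resolution).
    """
    if len(payload) != 16:
        raise ValueError("expected 16 bytes, got %d" % len(payload))
    values = struct.unpack("<16b", payload)  # 16 signed 8-bit integers
    return list(values[:8]), list(values[8:])


# Usage: a payload where every byte is 0xFF decodes to -1 on every channel.
first, second = parse_emg_characteristic(bytes([255] * 16))
```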

To interface with the Myo and perform real-time data processing and classification, we used a Xiaomi Redmi 4X smartphone running Android OS 7.1.2.

Software

For all offline processing (cleaning, class labeling, feature extraction), we used Python 3.6, with SciPy and TensorFlow 2 for data analysis and neural network training. The Android application was implemented in Java using Android Studio, and TensorFlow Lite was used to run the classifier in the portable .tflite format.

Gesture Set

Our design includes two types of gestures: finger taps (for the letters A, S, D, F and the space key, which lie directly under the fingers when the hand is at rest) and finger extensions (for the letters W, E, R and Shift, which are pressed by stretching the fingers). Because the sEMG amplitudes produced by these gestures are low, we ensured that the hand was positioned comfortably and at rest throughout. We also ensured that during all experiments the Myo Armband was placed at the same location (on the left forearm, approximately 3 inches from the elbow) with the LED display facing towards the inner right arm.

Datasets

Over the course of the project, we collected and sorted datasets totalling around 80,000 samples. The dataset can be downloaded from our GitHub repository. It is divided into two parts, a training set and an evaluation set, which are used to train and evaluate our neural network. We excluded data that was too noisy or that did not integrate well with our interface. Our final dataset consists of 6 gestures plus the neutral position. All data is saved as *.txt files and separated into 4 groups: raw data, cleaned data, classified (labeled) data, and features.

Features

To process data in real time, we used a sliding-window approach: the data stream is divided into segments of a fixed size, and a set of consecutive segments forms a window. From each window we extract 6 time-domain features per channel (6 features × 8 channels = 48 features). At each step, the oldest segment is dropped from the window and the next segment is appended at the end, so the window advances by one segment; in our design this offset is 10 ms. Features are then extracted from the new window, and the process repeats until the final window has been processed. The features we extract are:

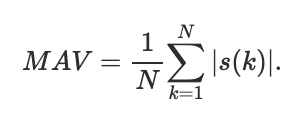

- Mean absolute value (MAV): average of the absolute values of the sEMG amplitudes; characterizes the level of muscle contraction

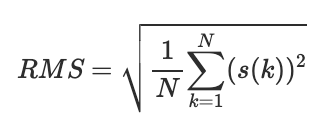

- Root mean square (RMS): square root of the mean of the squared sEMG amplitudes (i.e., of the signal's mean power); characterizes muscle activity

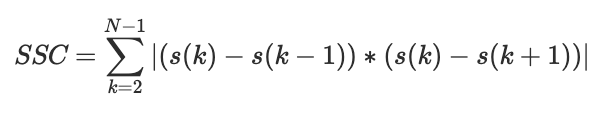

- Slope sign change (SSC): number of times the slope of the signal changes sign within the current window; characterizes the frequency content of the sEMG signal

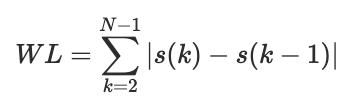

- Waveform length (WL): cumulative length of the sEMG waveform over the window, i.e., the sum of the absolute differences between consecutive samples; characterizes signal complexity

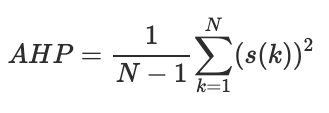

- Activity Hjorth parameter (AHP): variance of the signal, which corresponds to its total power (the area under its power spectrum)

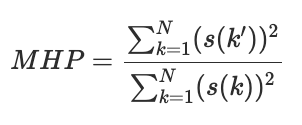

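- Mobility Hjorth parameter (MHP): square root of the ratio between the variance of the signal's first derivative and the variance of the signal; an estimate of the signal's mean frequency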
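The windowing and feature definitions above can be sketched in plain Python as follows. This is a minimal illustration, not our application code, and it rests on several assumptions: samples are 8-tuples of integers, `segment_len` and `segments_per_window` are hypothetical parameters (the text fixes only the 10 ms offset), SSC is counted without a noise threshold, and the 48 features are ordered feature by feature (all 8 MAVs first, then all 8 RMS values, and so on).

```python
import math
from collections import deque
from typing import Iterator, List, Sequence, Tuple

Sample = Tuple[int, ...]  # one sEMG sample: 8 channel values


def mav(x: Sequence[float]) -> float:
    """Mean absolute value."""
    return sum(abs(v) for v in x) / len(x)


def rms(x: Sequence[float]) -> float:
    """Root mean square."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def ssc(x: Sequence[float]) -> int:
    """Slope sign changes (local maxima and minima), no noise threshold."""
    return sum(1 for i in range(1, len(x) - 1)
               if (x[i] - x[i - 1]) * (x[i] - x[i + 1]) > 0)


def waveform_length(x: Sequence[float]) -> float:
    """Sum of absolute differences between consecutive samples."""
    return sum(abs(x[i] - x[i - 1]) for i in range(1, len(x)))


def hjorth_activity(x: Sequence[float]) -> float:
    """Variance of the signal."""
    m = sum(x) / len(x)
    return sum((v - m) ** 2 for v in x) / len(x)


def hjorth_mobility(x: Sequence[float]) -> float:
    """sqrt(var(first difference) / var(signal)); 0.0 for a flat signal."""
    activity = hjorth_activity(x)
    if activity == 0:
        return 0.0
    dx = [x[i] - x[i - 1] for i in range(1, len(x))]
    return math.sqrt(hjorth_activity(dx) / activity)


FEATURES = (mav, rms, ssc, waveform_length, hjorth_activity, hjorth_mobility)


def sliding_windows(samples: Sequence[Sample], segment_len: int,
                    segments_per_window: int) -> Iterator[List[Sample]]:
    """Yield windows of consecutive segments, advancing one segment per step."""
    window = deque(maxlen=segments_per_window)  # oldest segment drops out on append
    for start in range(0, len(samples) - segment_len + 1, segment_len):
        window.append(samples[start:start + segment_len])
        if len(window) == segments_per_window:
            yield [s for segment in window for s in segment]


def window_features(window: Sequence[Sample]) -> List[float]:
    """6 features x 8 channels = 48 values, ordered feature by feature."""
    channels = list(zip(*window))  # transpose: one tuple of values per channel
    return [f(ch) for f in FEATURES for ch in channels]
```

For a live stream, the same `deque` logic can be applied segment by segment as data arrives instead of iterating over a stored recording.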

Code for Data Processing

Here we provide code outlines for the classes that we created to process our data.

```python
# Deletes noisy parts of the signal or replaces them with a reference signal.
class emg_cleaner:

    # Deletes `points` samples from the start.
    def delete_points_from_start(self, points):
        ...

    # Deletes `points` samples from the end.
    def delete_points_from_end(self, points):
        ...

    # Adds `points` samples before the start; these samples are copied from the reference signal.
    def add_points_to_start(self, points):
        ...

    # Replaces the samples from `start` to `end` with the reference signal.
    def replace_points_in_middle(self, start, end):
        ...

    # Adds `points` samples after the end; these samples are copied from the reference signal.
    def add_points_to_end(self, points):
        ...

    # Plots all 8 channels of the signal from x_segment[0] to x_segment[1] with resolution y_scale.
    def mk_plots(self, x_segment, y_scale):
        ...

    # Saves the resulting file at `address`.
    def save(self, address):
        ...


# Assigns class labels to the samples of a file.
class class_editor:

    # Assigns class `class_name` to all samples between edges[i][0] and edges[i][1].
    def set_classification(self, edges, class_name):
        ...

    # Plots all 8 channels of the signal from x_segment[0] to x_segment[1] with resolution y_scale.
    def mk_plots(self, x_segment, y_scale):
        ...

    # Saves the resulting file at `address`.
    def save(self, address):
        ...


# Extracts features from the timestamped dataset produced by m_class_editor.
# Produces an output.csv file containing 7 features for each of the
# 8 sEMG channels, with timestamps. Format of the output data
# (feature columns followed by the class label):
#
#   MAV1, ..., CHP1, CLASS0
#   MAV1, ..., CHP1, CLASS1
#   ...
#   MAV1, ..., CHP1, CLASSN
class converter2:

    # Extracts features from a dataset.
    def process_file(self, custom_class=1):
        ...

    # Saves the resulting file at `address`.
    def save(self, address):
        ...
```
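As a hedged illustration of what the cleaning and labeling methods above do, the following sketch writes their core list operations as plain functions. It assumes the signal is held in memory as a list of 8-channel samples and that the reference is a separate recording (for example, of the hand at rest). Copying from the start of the reference, and treating `start`/`end` and each `edges[i]` pair as half-open ranges, are our assumptions, not documented behavior of the classes.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[int, ...]  # one 8-channel sEMG sample


def delete_points_from_start(signal: List[Sample], points: int) -> List[Sample]:
    """Drop the first `points` samples."""
    return signal[points:]


def delete_points_from_end(signal: List[Sample], points: int) -> List[Sample]:
    """Drop the last `points` samples (clamped so the result is never negative-length)."""
    return signal[:max(0, len(signal) - points)]


def add_points_to_start(signal: List[Sample], reference: Sequence[Sample],
                        points: int) -> List[Sample]:
    """Prepend the first `points` samples of the reference (assumption)."""
    return list(reference[:points]) + signal


def add_points_to_end(signal: List[Sample], reference: Sequence[Sample],
                      points: int) -> List[Sample]:
    """Append the first `points` samples of the reference (assumption)."""
    return signal + list(reference[:points])


def replace_points_in_middle(signal: List[Sample], reference: Sequence[Sample],
                             start: int, end: int) -> List[Sample]:
    """Overwrite samples start..end-1 with the first end-start reference samples."""
    if not 0 <= start <= end <= len(signal):
        raise ValueError("invalid range")
    if end - start > len(reference):
        raise ValueError("reference signal is too short")
    return signal[:start] + list(reference[:end - start]) + signal[end:]


def set_classification(labels: List[int], edges: Sequence[Tuple[int, int]],
                       class_name: int) -> List[int]:
    """Label samples edges[i][0]..edges[i][1]-1 with `class_name`."""
    labels = list(labels)
    for lo, hi in edges:
        for i in range(lo, min(hi, len(labels))):  # ignore edges past the end
            labels[i] = class_name
    return labels
```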

The table below maps each gesture to its class label.

| Gesture | Class |
|---|---|
| No Movement | 0 |
| Index Finger Tap | 1 |
| Middle Finger Tap | 2 |
| Ring Finger Tap | 3 |
| Pinky Finger Tap | 4 |
| Index Finger Extension | 5 |
| Middle Finger Extension | 6 |
| Ring Finger Extension | 7 |
| Thumb Tap | 8 |
| Pinky Extension | 9 |
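The Gesture Set section pairs the taps with A, S, D, F and the space key, and the extensions with W, E, R and Shift, but does not state which finger produces which key. Assuming standard left-hand touch typing (pinky on A, ring on S, middle on D, index on F, thumb on space), a lookup from class label to key could look like the sketch below; `KEY_FOR_CLASS` and `classes_to_text` are hypothetical names.

```python
from typing import Dict, Iterable

# Hypothetical class-to-key mapping, assuming standard left-hand touch typing.
KEY_FOR_CLASS: Dict[int, str] = {
    1: "f",      # index finger tap
    2: "d",      # middle finger tap
    3: "s",      # ring finger tap
    4: "a",      # pinky finger tap
    5: "r",      # index finger extension
    6: "e",      # middle finger extension
    7: "w",      # ring finger extension
    8: " ",      # thumb tap -> space
    9: "SHIFT",  # pinky extension -> Shift
}


def classes_to_text(labels: Iterable[int]) -> str:
    """Turn a sequence of class labels into text.

    Class 0 (no movement) produces nothing; SHIFT upper-cases the next key.
    """
    out = []
    shift = False
    for label in labels:
        key = KEY_FOR_CLASS.get(label)
        if key is None:  # class 0 or an unknown label
            continue
        if key == "SHIFT":
            shift = True
            continue
        out.append(key.upper() if shift else key)
        shift = False
    return "".join(out)
```

The sketch assumes each gesture has already been reduced to a single label; the per-window predictions produced by the sliding window would need to be collapsed into one label per gesture first.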

Artificial Neural Network Architecture

Our model consists of 3 layers: an input layer, a hidden layer, and an output layer. The input layer has 48 nodes, one for each feature (8 channels × 6 features). The hidden layer has 24 nodes (half the size of the input layer); this size was determined empirically, given the size of our dataset and the number of input nodes. The output layer has 7 nodes, one for each class (6 gestures plus the neutral position). The hidden layer uses a sigmoid activation function, which restricts its outputs to the range between 0 and 1. The output layer applies the softmax function, which turns its outputs into a probability for each class, with the probabilities summing to 1. We chose this architecture based on the conclusions of prior research, and it demonstrated high accuracy.
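To make the layer sizes concrete, here is a minimal pure-Python sketch of the forward pass of a 48-24-7 network with a sigmoid hidden layer and a softmax output. The weights are random placeholders rather than trained values, and `init_layer`, `dense` and `forward` are hypothetical helper names; our model itself was built and trained with TensorFlow 2.

```python
import math
import random
from typing import List, Sequence, Tuple

N_INPUT, N_HIDDEN, N_OUTPUT = 48, 24, 7
Layer = Tuple[List[List[float]], List[float]]  # (weights[unit][input], bias[unit])


def sigmoid(z: float) -> float:
    """Numerically stable logistic function, output in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softmax(z: Sequence[float]) -> List[float]:
    """Normalize scores into probabilities that sum to 1."""
    m = max(z)  # subtract the max to avoid overflow in exp
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Small random weights and zero biases (placeholders, not trained)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x: Sequence[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Affine layer: weights[j][i] connects input i to unit j."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def forward(x: Sequence[float], hidden: Layer, output: Layer) -> List[float]:
    h = [sigmoid(z) for z in dense(x, *hidden)]  # 24 sigmoid units
    return softmax(dense(h, *output))            # 7 class probabilities


rng = random.Random(0)
hidden = init_layer(N_INPUT, N_HIDDEN, rng)
output = init_layer(N_HIDDEN, N_OUTPUT, rng)
probs = forward([0.1] * N_INPUT, hidden, output)  # one 48-feature vector
predicted_class = max(range(N_OUTPUT), key=lambda k: probs[k])
```

In Keras terms, this corresponds to two `tf.keras.layers.Dense` layers, one with 24 units and `activation="sigmoid"` and one with 7 units and `activation="softmax"`. With these sizes the network has 48·24 + 24 = 1,176 parameters in the hidden layer and 24·7 + 7 = 175 in the output layer, 1,351 in total.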